10 Tips for Making Sense of COVID-19 Models for Decision-Making

By Elizabeth A. Stuart, PhD, Daniel Polsky, PhD, Mary Kathryn Grabowski, PhD, and David Peters, MD, DrPH

Every day there seems to be a new model of some aspect of the COVID-19 pandemic.

At a time when it can seem like our lives and livelihoods are somehow tied to what gets spit out of these COVID-19 models, it is a good time to learn a bit about what the models are made up of, and what they can—and can’t—do.

Models can be enormously useful during an epidemic if they synthesize evidence and scientific knowledge. The COVID-19 pandemic is a complex phenomenon, and in such a dynamic setting it is nearly impossible to make informed decisions without the help that models can provide. However, models don’t perfectly capture reality: they simplify it to help answer specific questions.

Below are 10 tips for making sense of COVID-19 models used for decision-making, such as directing health care resources to certain areas or identifying how long social distancing policies may need to remain in effect.

1. Make sure the model fits the question you are trying to answer.

There are many different types of models, and they can be used to address a wide variety of questions. Three types can be helpful for COVID-19:

- Models that simplify how complex systems, such as disease transmission, work. This is often done by putting people into compartments related to how a disease spreads, like “susceptible,” “infected,” and “recovered.” These models can be overly simplistic, with few data inputs, and may not allow for the uncertainty that exists in a pandemic, but they can be useful in the short term for understanding basic structures. However, they generally cannot be implemented in ways that account for complex systems or for ongoing changes in system or individual behavior.

- Forecasting models try to predict what will actually happen. They use existing data to project outcomes over a relatively short time horizon. These models are challenging to use for mid-term assessment, such as a few months out, because pandemics keep evolving.

- Strategic models show multiple scenarios to consider the potential implications of different interventions and contexts. These models try to capture some of the uncertainty about the underlying disease processes and behaviors. They might take a few values of key parameters, such as the case fatality ratio or the effectiveness of social distancing measures, and play out different scenarios for disease spread over time. These kinds of models can be particularly useful for decision-making.
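To make the first and third model types concrete, here is a minimal sketch of a compartmental “susceptible, infected, recovered” (SIR) model played out under a few strategic scenarios. The transmission rate, recovery rate, population size, starting infections, and distancing-effectiveness values are all hypothetical illustrations rather than COVID-19 estimates, and the one-day time step is a simplifying assumption.

```python
# A minimal discrete-time SIR sketch. All parameter values are hypothetical
# illustrations, not estimates for COVID-19.
N = 1_000_000     # assumed population size
GAMMA = 0.1       # assumed recovery rate per day (average infectious period of 10 days)
BASE_BETA = 0.3   # assumed transmission rate per day; R0 = beta / gamma = 3.0


def run_sir(beta, gamma=GAMMA, n=N, i0=100, days=365):
    """Step a simple SIR model forward one day at a time.

    Returns a list of (susceptible, infected, recovered) tuples, one per day.
    """
    s, i, r = float(n - i0), float(i0), 0.0
    history = [(s, i, r)]
    for _ in range(days):
        new_infections = beta * s * i / n   # S-I contacts that transmit today
        new_recoveries = gamma * i          # infected people who recover today
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        history.append((s, i, r))
    return history


# Strategic scenarios: hypothetical fractions of transmission removed by distancing.
scenarios = {"no distancing": 0.0, "moderate": 0.3, "strong": 0.6}

results = {}
for name, effectiveness in scenarios.items():
    history = run_sir(beta=BASE_BETA * (1 - effectiveness))
    results[name] = {
        "peak_infected": round(max(i for _, i, _ in history)),
        "ever_infected": round(N - history[-1][0]),
    }

for name, summary in results.items():
    print(name, summary)
```

Each scenario changes only one assumed parameter. Everything else, such as a single well-mixed population and behavior that stays constant within a scenario, is built into the model structure, which is exactly the kind of simplification described above.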

-

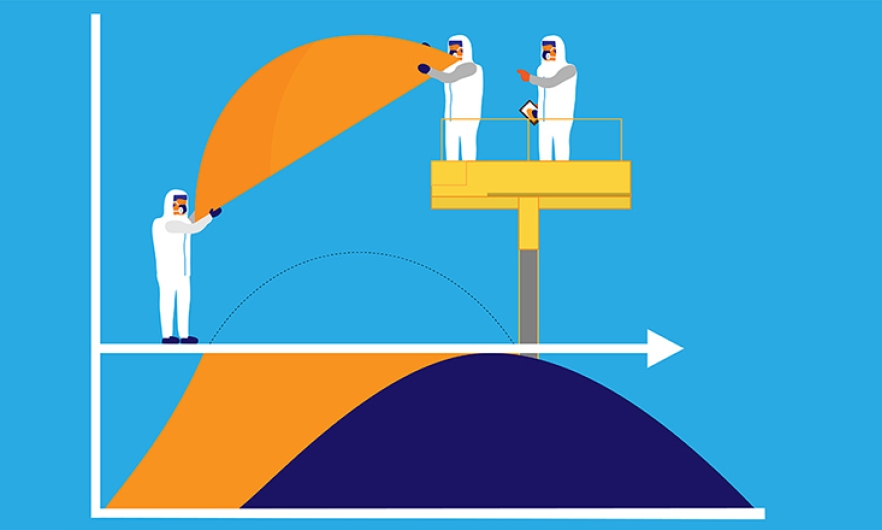

Be mindful that forecast models are often built with the goal of change, which affects their shelf life.

The irony of many COVID-19 modeling efforts is that, in some cases, especially for forecasting, a key purpose of building and disseminating a model is to prompt behavior change at the individual or system level, e.g., to reinforce the need for physical distancing.

This makes it difficult to assess the performance of forecasting models, since the model’s results (and reactions to them) become part of the system. In these cases, a forecasting model may look inaccurate even though it may have been accurate for an unmitigated scenario with no behavior change. In fact, a public health success may be precisely when the forecasts do not come true!
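A back-of-the-envelope sketch shows how a forecast can “miss” for good reasons. Simple exponential growth stands in for a forecasting model here, and every number (starting cases, growth rates, and the lag before behavior changes) is hypothetical.

```python
import math

# All numbers are hypothetical. Simple exponential growth stands in for a forecasting model.
CASES_TODAY = 1_000          # daily new cases when the forecast is published
DAYS_AHEAD = 28              # forecast horizon in days
GROWTH_UNMITIGATED = 0.15    # assumed daily growth rate with no behavior change
GROWTH_AFTER_CHANGE = 0.02   # assumed daily growth rate once people reduce contacts
DAYS_UNTIL_CHANGE = 7        # assumed lag before the forecast changes behavior

# The forecast assumes the unmitigated growth rate holds for the whole horizon.
forecast = CASES_TODAY * math.exp(GROWTH_UNMITIGATED * DAYS_AHEAD)

# What happens if the forecast itself prompts behavior change after a lag.
realized = CASES_TODAY * math.exp(
    GROWTH_UNMITIGATED * DAYS_UNTIL_CHANGE
    + GROWTH_AFTER_CHANGE * (DAYS_AHEAD - DAYS_UNTIL_CHANGE)
)

print(f"forecast (no behavior change): {forecast:,.0f} daily cases")
print(f"realized (behavior changed):   {realized:,.0f} daily cases")
print(f"forecast overshoot: {forecast / realized:.1f}x")
```

The forecast overshoots by a wide margin, yet the gap reflects the response to the forecast rather than a flaw in its arithmetic under the no-change assumption.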

3. Look for models (and underlying collaborations) that include diverse aspects and expertise.

One of the challenges in modeling COVID-19 is the multitude of factors involved: infectious disease dynamics, social and behavioral factors such as how frequently individuals interact, economic factors such as employment and safety net policies, and more.

One advantage is that we do know COVID-19 is an infectious disease, and we have a good understanding of how related diseases spread. Likewise, health economists and public health experts have years of experience understanding complex social systems. Look for models, and their underlying collaborations, that take advantage of that breadth of existing knowledge.

4. Consider the time frame of the models and their projections.

Incorporating subject-matter expertise from a range of areas may be particularly important when aiming to project long-term outcomes, given that we don’t yet have data on long-term outcomes for COVID-19 itself.

Instead, we must rely on knowledge about the underlying mechanisms. Some approaches rely primarily on “curve fitting”: fitting a curve to observed data and projecting that trend into the future, without modeling the underlying disease processes. These models may be appropriate for predicting outcomes a few days ahead, but they can be hard to use for predicting long-term outcomes, or outcomes under different scenarios or assumptions about behavior, such as how well social distancing measures are implemented.

In other contexts where long-term data are already available, such as forecasting annual influenza rates, a more empirical approach may be more reasonable.
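The contrast between curve fitting and modeling the underlying mechanism can be sketched in a few lines. In this hypothetical setup, a simple SIR model plays the role of the “true” epidemic; an exponential curve is fitted by least squares to its first 20 days and then extrapolated. The parameter values and the 20-day fitting window are illustrative assumptions.

```python
import math


def sir_infected(beta=0.3, gamma=0.1, n=1_000_000, i0=100, days=150):
    """Currently infected people per day from a simple discrete-time SIR model.

    Here it plays the role of the 'true' epidemic; parameters are hypothetical.
    """
    s, i = float(n - i0), float(i0)
    series = [i]
    for _ in range(days):
        new_infections = beta * s * i / n
        s -= new_infections
        i += new_infections - gamma * i
        series.append(i)
    return series


truth = sir_infected()

# "Curve fitting": ordinary least-squares line through log(infected) over the
# first 20 days, i.e., an exponential curve with no disease mechanism behind it.
fit_days = list(range(20))
log_y = [math.log(truth[t]) for t in fit_days]
t_mean = sum(fit_days) / len(fit_days)
y_mean = sum(log_y) / len(log_y)
slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(fit_days, log_y)) / sum(
    (t - t_mean) ** 2 for t in fit_days
)
intercept = y_mean - slope * t_mean


def curve_fit_projection(day):
    """Extrapolate the fitted exponential trend to any day."""
    return math.exp(intercept + slope * day)


for day in (25, 120):
    ratio = curve_fit_projection(day) / truth[day]
    print(f"day {day}: curve fit is {ratio:,.2f}x the mechanistic model")
```

The fitted curve tracks the mechanistic model closely a few days past the data, but by day 120 it is off by orders of magnitude, because a pure exponential fit has no notion that the pool of susceptible people is being depleted.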

5. Consider the (structural and data) inputs required to generate projections from the model.

A trade-off in modeling complex systems is that more complex models need either more structural assumptions (e.g., about how disease spreads) or more data. It’s important to consider the validity of both pieces: how much is known about the disease spread and other structural assumptions, and whether the data inputs are trustworthy.

Many of the data elements we wish we had are simply not available at this time, or may not be valid. With testing shortages, for example, tests may be given only to symptomatic people who are likely to test positive, so the rate of positive tests may mean something very different than it does in places with broad, community-wide testing. Data from a specific health system may be more timely and easier to obtain than death certificate data, but they will not necessarily reflect the full population of an area. The relevance, quality, timeliness, and representativeness of the underlying data all need to be considered.
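A small arithmetic sketch shows how the same underlying prevalence can produce very different test-positivity rates depending on who gets tested. The prevalence, the testing fractions, and the assumption of a perfectly accurate test are all hypothetical.

```python
def expected_positivity(prevalence, frac_tested_infected, frac_tested_uninfected, population=100_000):
    """Share of tests that come back positive, given who gets tested.

    Assumes a perfectly accurate test (a simplifying, hypothetical assumption).
    """
    infected = prevalence * population
    uninfected = population - infected
    positives = frac_tested_infected * infected
    negatives = frac_tested_uninfected * uninfected
    return positives / (positives + negatives)


PREVALENCE = 0.02  # hypothetical: 2% of people currently infected in both places

# Place A: scarce tests reserved mostly for symptomatic, likely-positive people.
targeted = expected_positivity(PREVALENCE, frac_tested_infected=0.30, frac_tested_uninfected=0.005)
# Place B: broad, community-wide testing that samples infected and uninfected alike.
broad = expected_positivity(PREVALENCE, frac_tested_infected=0.10, frac_tested_uninfected=0.10)

print(f"targeted testing: {targeted:.0%} positive")
print(f"broad testing:    {broad:.0%} positive")
```

Both places have the same 2% prevalence by construction, yet targeted testing yields a positivity rate of roughly 55% versus 2% under broad testing, so raw positivity rates are hard to compare across places.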

6. Be conscious of what is in the model as well as what is NOT in the model.

Like the man who looks for his lost keys under the lamppost only because that is where the light is, decision makers run the risk of focusing attention only on the factors included in a model.

A model focused only on hospital factors might draw attention to hospital needs while ignoring the broader context of the public health system or other social factors. A model focused on the effectiveness of social distancing may not consider the social and economic needs of the community in which social distancing is being implemented.

7. Assess whether the model accounts for various sources of uncertainty.

Models rarely give “the” answer. All models will involve multiple forms of uncertainty; look for models that convey that uncertainty, such as by showing multiple scenarios and a range of possible outcomes.

This uncertainty comes in multiple forms:

- Uncertainty about the underlying data informing the model, e.g., having testing data for only a portion of the population, or death data that may not be fully accurate

- Statistical uncertainty given a particular model—this is what standard statistical measures such as standard errors and confidence intervals account for

- More foundational uncertainty about certain parameters in the model—e.g., whether we have the right transmission rates or accurate estimates of the effectiveness of social distancing

- Even more foundational uncertainty about whether the right factors are in the model or the model is conceptualized correctly—e.g., not accounting for social factors or insurance status in models of behavior, or assuming that all individuals operate in the same ways, versus allowing for variation in behavior

Many of our statistical approaches and models focus only on the second of these forms, statistical uncertainty. Decision makers should look for models that show some assessment of uncertainty, ideally including sensitivity to key data inputs, and should also recognize that the models likely still do not show the full uncertainty arising from these different sources.
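One common way to go beyond purely statistical uncertainty and address uncertainty about parameters (the third form in the list above) is to propagate it through the model: draw many plausible parameter values, run the model for each, and report the spread of outcomes. The sketch below does this for R0 in a simple SIR model; the uniform 1.5 to 3.5 range for R0, the recovery rate, the population size, and the fixed random seed are hypothetical choices for illustration.

```python
import random


def final_attack_rate(beta, gamma, n=1_000_000, i0=100, days=730):
    """Fraction of the population ever infected in a simple discrete-time SIR model."""
    s, i = float(n - i0), float(i0)
    for _ in range(days):
        new_infections = beta * s * i / n
        s -= new_infections
        i += new_infections - gamma * i
    return 1 - s / n


rng = random.Random(42)  # fixed seed so the sketch is reproducible
GAMMA = 0.1              # assumed recovery rate per day

# Instead of one "best" R0, draw many plausible values from an assumed range
# and run the model once per draw (R0 = beta / gamma, so beta = R0 * gamma).
draws = []
for _ in range(200):
    r0 = rng.uniform(1.5, 3.5)  # hypothetical plausible range for R0
    draws.append(final_attack_rate(beta=r0 * GAMMA, gamma=GAMMA))

draws.sort()
# Roughly the 2.5th, 50th, and 97.5th percentiles of the 200 sorted draws.
low, median, high = draws[5], draws[100], draws[194]
print(f"share ever infected: median {median:.0%}, ~95% range {low:.0%} to {high:.0%}")
```

This still captures only part of the picture: it takes the SIR structure itself for granted, so it says nothing about the most foundational form of uncertainty, whether the model is conceptualized correctly.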

8. Probe the assumptions underlying the model, and look for transparency in those assumptions.

Given all of the inputs required for a model, and all of the potential complexities, look for models that are transparent about:

- the underlying structure being assumed (e.g., a particular disease transmission model or specific assumptions about social interactions);

- where the data and inputs for the model come from (e.g., the assumptions that go into the model); and

- the confidence in the model results, as expressed through confidence intervals or analyses of sensitivity to key parameters.

9. Recognize the work required to develop a well-functioning model.

Developing a fully functioning, well-calibrated model takes time and expertise.

As noted above, there is a trade-off between complexity and data input needs. We might want a model to account for all aspects of the system: individual behavior, health care system factors, political factors, and biological factors. However, each additional piece requires more data, parameters, and assumptions. These might include data on local conditions, to ensure the model is well calibrated to local areas, as well as data (such as from existing research) that inform parameter values (such as the basic reproduction number, R0, in the case of COVID-19). A true art in modeling, then, is figuring out which pieces of the model are crucial and how to account for them.

Because of this, a key step in model development is model calibration, such as examining how well the model fits known data. Unfortunately, given the speed of the pandemic, we don’t currently have time for extensive model checking. All current modeling efforts therefore need to balance this care against the need to get predictions out in a timely way.
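A minimal sketch of what calibration can look like in practice: choose the parameter value whose model output best matches observed data. Here the “observed” series is synthetic (generated from the same simple SIR model with a made-up noise pattern), the grid of candidate transmission rates is arbitrary, and least squares is just one of many possible fit criteria.

```python
def sir_daily_new_infections(beta, gamma=0.1, n=100_000, i0=10, days=40):
    """Daily new infections from a simple discrete-time SIR model."""
    s, i = float(n - i0), float(i0)
    new_cases = []
    for _ in range(days):
        new_infections = beta * s * i / n
        s -= new_infections
        i += new_infections - gamma * i
        new_cases.append(new_infections)
    return new_cases


# Hypothetical "observed" data: generated from beta = 0.25 with a deterministic
# +/-10% and +/-5% noise pattern, standing in for real case counts.
TRUE_BETA = 0.25
noise = [1.10, 0.90, 1.05, 0.95] * 10
observed = [c * e for c, e in zip(sir_daily_new_infections(TRUE_BETA), noise)]


def sum_squared_error(beta):
    """How far the model's output for a given beta is from the observed series."""
    predicted = sir_daily_new_infections(beta)
    return sum((p - o) ** 2 for p, o in zip(predicted, observed))


# Calibration by grid search: try candidate transmission rates from 0.15 to 0.35
# and keep the one whose output best matches the observed series.
candidates = [round(0.15 + 0.01 * k, 2) for k in range(21)]
best_beta = min(candidates, key=sum_squared_error)
print(f"calibrated beta: {best_beta}, implied R0: {best_beta / 0.1:.1f}")
```

Real calibration is much harder: several parameters interact, the data are incomplete and biased in the ways described earlier, and a good fit to past data does not guarantee good projections.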

10. Be choosy about which models to trust, but also don’t rely too much on one model.

As described, modeling is a complex topic that requires substantive, computational, and statistical expertise. Look for models that:

- make clear their inputs and where those inputs come from;

- show uncertainty in the different pieces, including both statistical (random) uncertainty and uncertainty about key parameter values; and

- incorporate a wide range of viewpoints, including those of infectious disease experts, health system experts, modeling experts, and others.

Finally, don’t put too much stock in any particular model. Use multiple models (or model settings) to examine a specific question, to allow for different assumptions, including different model structures, or to understand different dimensions of the epidemic. While no single model will be fully “correct,” models can still be useful. In particular, models with transparent and empirically based assumptions can be quite useful for identifying the key decision points and factors to focus on, and for guiding when to implement them.
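When several models address the same question, one simple first step is to report the spread of their projections rather than any single number, and to flag a model that disagrees with all of the others so its assumptions can be examined. The model names and projection values below are made up purely for illustration, and the min, max, and median summaries are just one simple way to combine them.

```python
from statistics import median

# Hypothetical projections of peak hospital beds needed, from three differently
# structured models, each run under two assumption settings.
projections = {
    "compartmental model": [1200, 1800],
    "strategic scenario model": [950, 2100],
    "curve-fitting model": [1400, 1600],
}


def outlying_models(projections):
    """Return models whose runs all fall outside the range spanned by the others.

    Such a model is a prompt to probe its assumptions, not necessarily to drop it.
    """
    flagged = []
    for name, runs in projections.items():
        others = [v for other, vals in projections.items() if other != name for v in vals]
        if max(runs) < min(others) or min(runs) > max(others):
            flagged.append(name)
    return flagged


all_values = [v for runs in projections.values() for v in runs]
print(f"range across models and settings: {min(all_values)} to {max(all_values)}")
print(f"median across models and settings: {median(all_values)}")
print("models that disagree with the rest:", outlying_models(projections) or "none")
```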